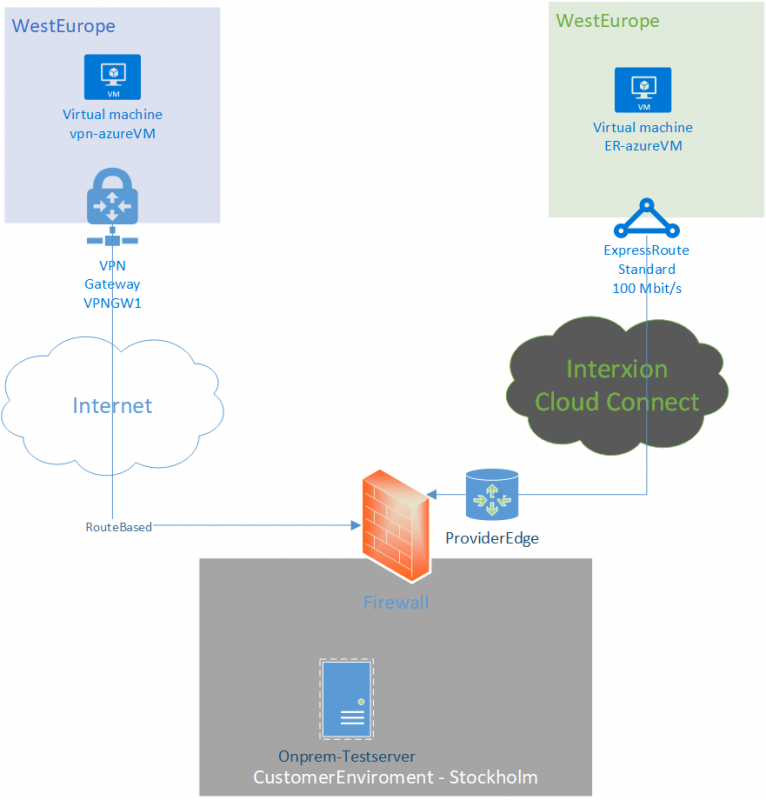

In some scenarios, companies use public IP ranges for internal purposes. This is more common in education or in larger, older enterprises, as they were assigned a sizeable public IP range. This creates some unique challenges for Azure Firewall in combination with ExpressRoute or VPN.

By default, Azure Firewall will source NAT (SNAT) traffic to destination IP addresses outside the RFC 1918 space (10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16).

If the non-RFC 1918 range is reached over ExpressRoute or VPN, the firewall will source NAT to one of its own private IPs. For example, if you have 192.168.0.0/26 defined as your AzureFirewallSubnet, the source can become 192.168.0.6. The address is chosen more or less at “random”, since Azure Firewall consists of at least two instances behind the scenes. Hence, if a virtual machine in Azure (Virtual Machine Windows) with the source IP 172.16.0.10, sitting “behind” the firewall, communicates with an on-premises virtual machine (Virtual Machine Linux) with the IP 30.30.30.10, the target machine will see one of the Azure Firewall IPs as the source IP, for example 192.168.0.5.

For certain applications, this can break functionality and is therefore not desired behaviour. Luckily, Microsoft released a feature that lets us define our own ranges to be excluded from source NAT. In the Azure Portal, navigate to the Firewall and select Private IP range.

The IANA private ranges (RFC 1918) are already defined here. We can add our 30.30.30.0/24 range to exclude it from source NAT. After the change is applied, Virtual Machine Linux will see 172.16.0.10, the IP of Virtual Machine Windows, as the source IP instead of the Azure Firewall internal IP.
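To make the decision logic concrete, here is a minimal Python sketch of the behaviour as described in this post. It is not Azure code: the function name `is_snat_applied` and its parameters are made up for illustration, and it only models the rule "source NAT unless the destination falls inside a private range", assuming the on-premises range is 30.30.30.0/24.

```python
import ipaddress

# The RFC 1918 ranges named in this post (the default "private" set).
RFC1918 = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_snat_applied(destination, extra_private_ranges=()):
    """Return True if traffic to `destination` would be source NATed.

    Hypothetical helper: models only the rule described in the text,
    not the actual Azure Firewall implementation.
    """
    dest = ipaddress.ip_address(destination)
    private = RFC1918 + [ipaddress.ip_network(r) for r in extra_private_ranges]
    # SNAT happens only when the destination is in none of the private ranges.
    return not any(dest in net for net in private)


# Default behaviour: the on-premises Linux VM uses a public IP, so SNAT applies.
print(is_snat_applied("30.30.30.10"))                     # True
# With the custom range excluded, the original source IP is preserved.
print(is_snat_applied("30.30.30.10", ["30.30.30.0/24"]))  # False
```

The second call corresponds to the state after adding the range under Private IP range: Virtual Machine Linux would then see the real IP of Virtual Machine Windows.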

Via ARM template

If you want to add this via ARM templates instead, add the following snippet under the properties configuration of the firewall resource:

    "additionalProperties": {
        "Network.SNAT.PrivateRanges": "IANAPrivateRanges , 30.30.30.0/24"
    },
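If you generate your templates from a script, it can help to validate the ranges before they end up in the ARM snippet. The following is a small Python sketch under stated assumptions: the helper `build_snat_private_ranges` is hypothetical, and the exact separator formatting of the value (comma followed by a space) is an assumption rather than something confirmed here. It only builds the `additionalProperties` fragment shown above and rejects malformed prefixes.

```python
import ipaddress
import json


def build_snat_private_ranges(custom_ranges, include_iana=True):
    """Build the additionalProperties fragment for custom SNAT private ranges.

    Hypothetical helper for illustration only.
    """
    entries = ["IANAPrivateRanges"] if include_iana else []
    for cidr in custom_ranges:
        # strict=True raises ValueError when host bits are set,
        # e.g. 30.30.30.0/16 is rejected while 30.30.30.0/24 is accepted.
        network = ipaddress.ip_network(cidr, strict=True)
        entries.append(str(network))
    return {
        "additionalProperties": {
            "Network.SNAT.PrivateRanges": ", ".join(entries)
        }
    }


fragment = build_snat_private_ranges(["30.30.30.0/24"])
print(json.dumps(fragment, indent=2))
```

Catching a malformed prefix in the script is cheaper than discovering it during a template deployment.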

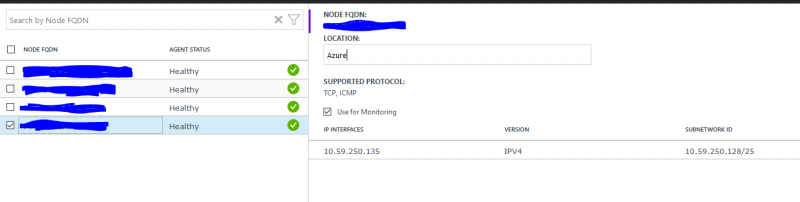

Note: If the OMS agent is not able to communicate over TCP 8084, only ICMP will be seen under Supported Protocol.
