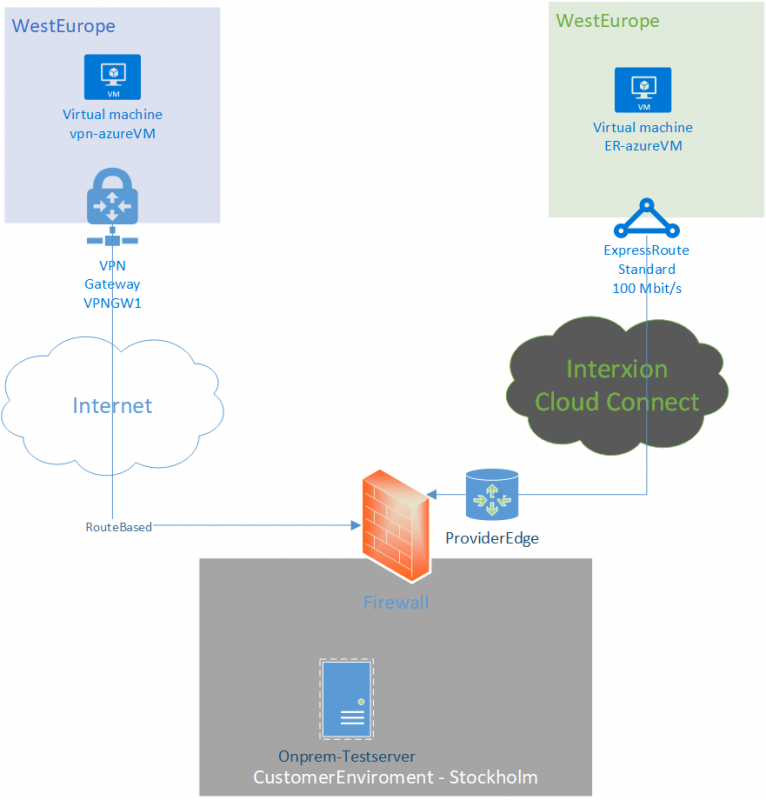

In the previous part, we created the Firewall Policy. In this part we take that policy, make a few minor changes, and convert the Firewall we got our framework from.

To start off, the Firewall Policy itself will not do “anything” until it is applied to an Azure Firewall resource (or a Secured hub, which is Azure Firewall inside Virtual WAN). The policy can be applied to a new Firewall or used to convert an existing one. In this particular case, the target is converting an existing Azure Firewall.

Before converting, if we already have another Firewall Policy that we want as a parent of our current policy, we can add it to the Azure Firewall Policy first. We can do this either in the Portal or via code. Open the ARM template in VS Code and, in the “Microsoft.Network/firewallPolicies” resource, under properties, add the basePolicy reference. Example code:

    {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            ..
            "basepolicy": {
                "type": "string",
                "defaultValue": "resourceid"
            }
            ...
        },
        "variables": {},
        "resources": [
            {
                "type": "Microsoft.Network/firewallPolicies",
                ..
                "properties": {
                    "basePolicy": {
                        "id": "[parameters('basepolicy')]"
                    }
                    ...
                }
            }
        ]
    }

Go live

Converting an existing Azure Firewall to Firewall Manager is a non-disruptive operation. How this fits into your change regime may differ, since Azure Firewall is more often than not a central component of your deployment and therefore requires a change window. From a technical standpoint, however, no traffic was lost in the network tests I ran during the conversion.

Converting an existing Azure Firewall can be done through the Portal by following the guide.

If you already handle Azure Firewall via code, there are three things we need to do (using an ARM template as the example).

- Remove all current Azure Firewall rules from the ARM template

- Remove the threatIntelMode setting

- Add a reference to the resource ID of the Firewall Policy (the one we converted)

    "firewallPolicy": {
        "id": "resourceIDofFirewallPolicy"
    }

Now the configuration is ready for deployment. If there is already a pipeline, use that. In this example, we deploy via PowerShell:

    New-AzResourceGroupDeployment -Name "1" -ResourceGroupName "firewall-rg" `
        -Mode Incremental -TemplateFile .\firewall.json `
        -TemplateParameterFile .\firewall.parameters.json -Verbose

The conversion usually takes about the same amount of time as a regular Azure Firewall rule update. One thing I noted is that if the rules reference IP Groups, it usually takes a bit longer.
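For reference, the three template changes listed earlier can be sketched on the firewall resource roughly as follows. This is a minimal sketch, not the template used in this series: the parameter names (`firewallName`, `firewallSubnetId`, `publicIpId`, `firewallPolicyId`), the `apiVersion`, the SKU, and the `ipconfig1` name are assumptions for illustration. ARM templates accept `//` comments, but strict JSON tooling may require removing them.

```json
{
    // Hypothetical example: names, apiVersion and SKU are assumptions.
    "type": "Microsoft.Network/azureFirewalls",
    "apiVersion": "2020-05-01",
    "name": "[parameters('firewallName')]",
    "location": "[resourceGroup().location]",
    "properties": {
        "sku": {
            "name": "AZFW_VNet",
            "tier": "Standard"
        },
        "ipConfigurations": [
            {
                "name": "ipconfig1",
                "properties": {
                    "subnet": { "id": "[parameters('firewallSubnetId')]" },
                    "publicIPAddress": { "id": "[parameters('publicIpId')]" }
                }
            }
        ],
        // Removed from this resource: applicationRuleCollections,
        // networkRuleCollections, natRuleCollections and threatIntelMode.
        // The Firewall Policy referenced below now carries those settings.
        "firewallPolicy": {
            "id": "[parameters('firewallPolicyId')]"
        }
    }
}
```

Passing the policy resource ID as a parameter, rather than hard-coding it, keeps the same template usable across environments.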

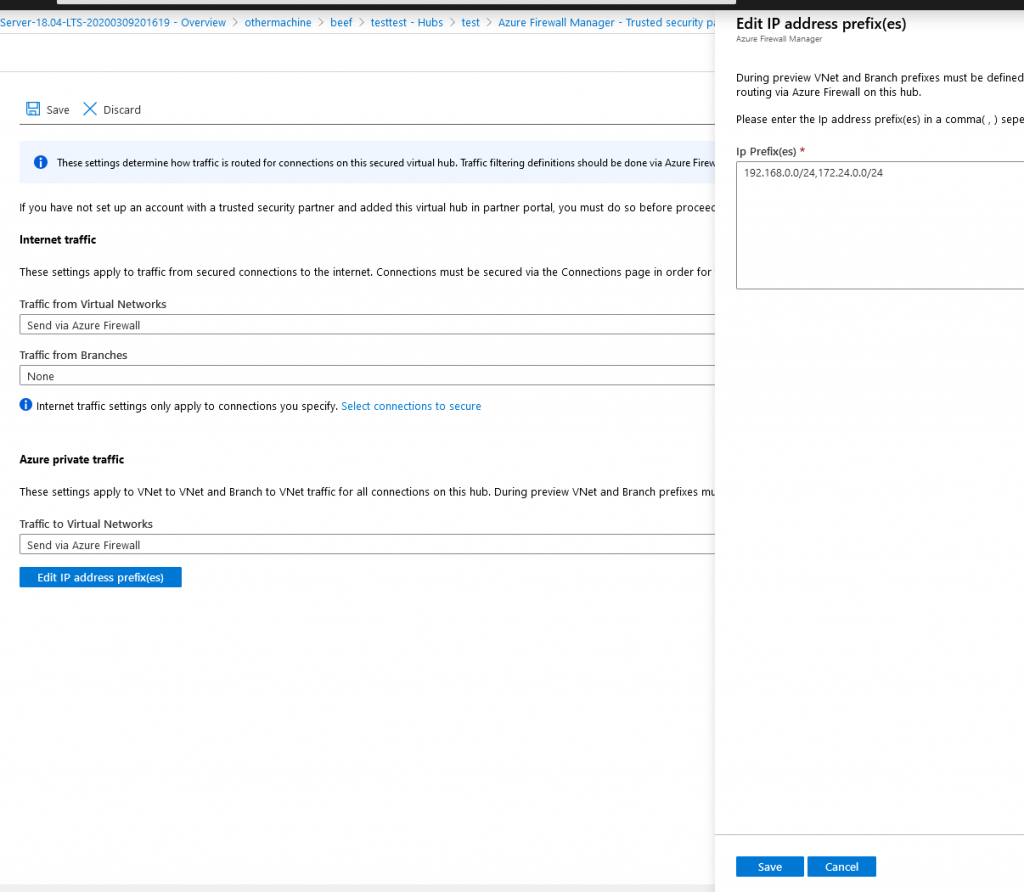

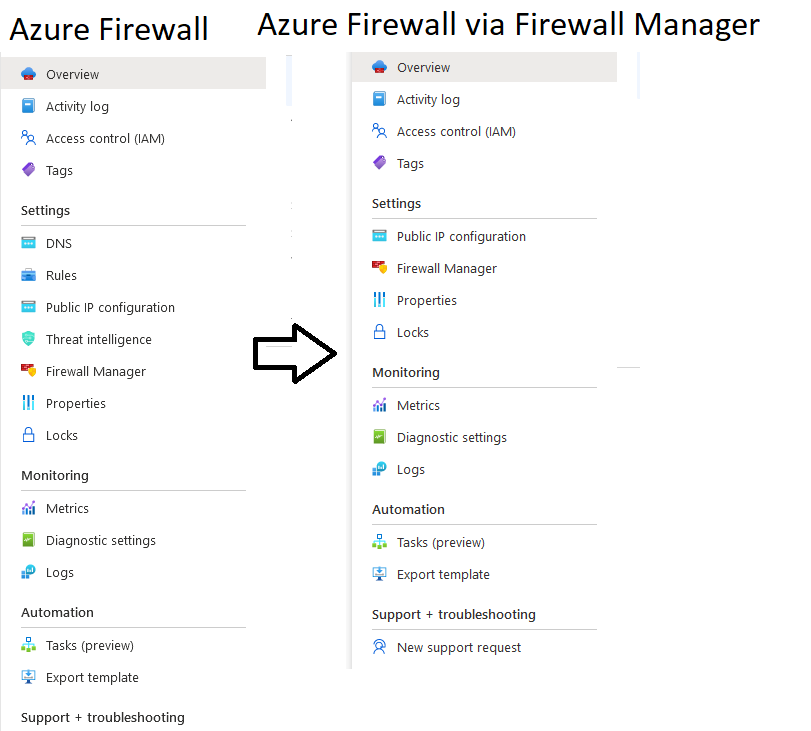

After the deployment is complete, we can see the difference in two places in the Azure Portal. On the Firewall object itself, we now have fewer options under Settings.

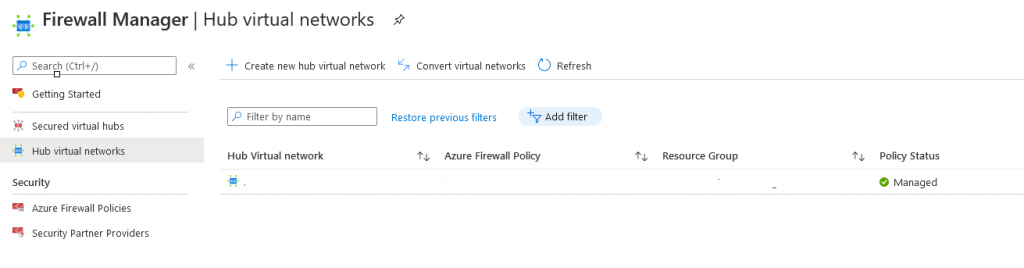

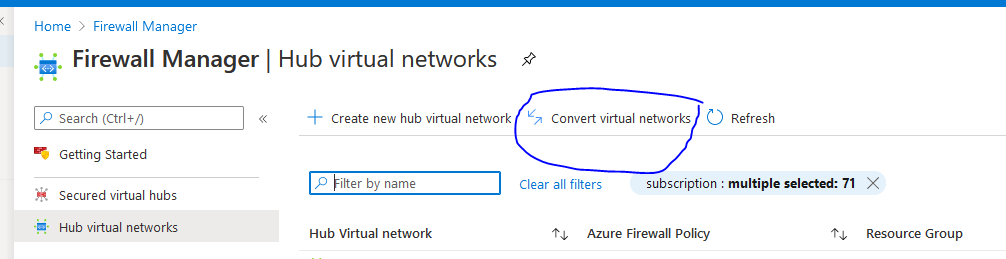

The settings have now moved to Firewall Manager, where we can find the Firewall under “Hub virtual networks”.